Mesh Experience

ABOUT

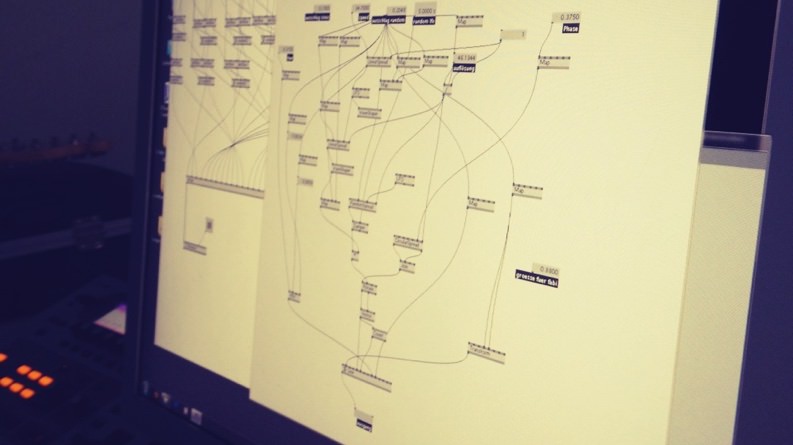

interactive guitar visualisation

Our primary aim was to create a visualisation that makes a musical performance legible. We also wanted to show how live shows could benefit from interactive visual effects generated by the musicians themselves.

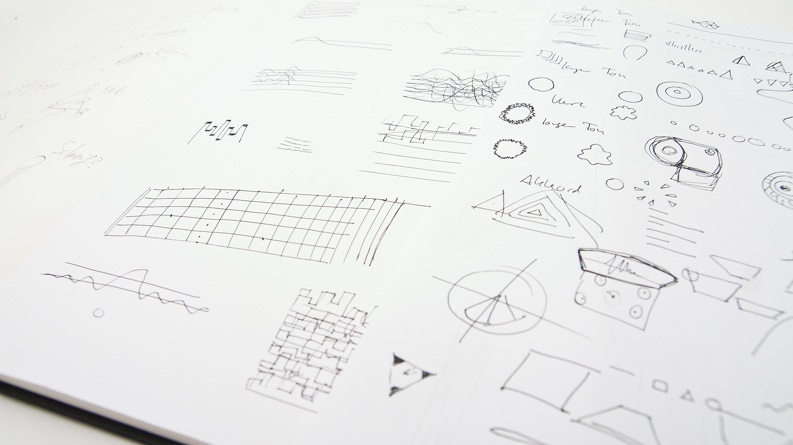

SKETCHING / VISUAL DESIGN / SPATIAL DESIGN / PROTOTYPING / PROGRAMMING / VIDEO PRODUCTION / PLAYING

All visualisations result from real-time sound processing and movement through space. No post-production.

CONCEPT

enhancing the live performance

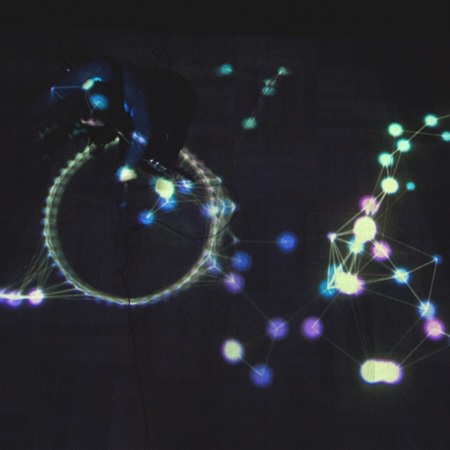

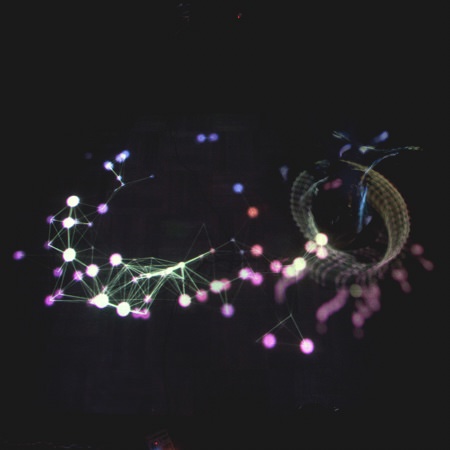

Mesh Experience is an audio visualisation that responds to guitar performances in real time. Matching the range of a guitar, the visualisation consists of 48 points, each of which represents one tone and is identified by its position and colour. Together, the points form a circle that surrounds the performer, note for note.
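The layout of the 48 tone points can be sketched as follows. This is a minimal illustration, not the project's code: it assumes the tones are 48 consecutive semitones starting at MIDI note 40 (open low E in standard tuning), that the points are spaced evenly around a circle of radius 1, and that each colour is an evenly spaced hue. The names `TonePoint`, `build_circle` and `note_to_point` are hypothetical.

```python
import colorsys
import math
from dataclasses import dataclass
from typing import List, Tuple

NUM_POINTS = 48          # from the text: one point per tone
LOWEST_MIDI_NOTE = 40    # assumption: open low E (E2) in standard tuning
RADIUS = 1.0             # assumption: circle radius in arbitrary scene units


@dataclass
class TonePoint:
    midi_note: int
    x: float
    y: float
    rgb: Tuple[float, float, float]


def build_circle(num_points: int = NUM_POINTS,
                 lowest: int = LOWEST_MIDI_NOTE,
                 radius: float = RADIUS) -> List[TonePoint]:
    """Place one point per semitone evenly around a circle, counter-clockwise from angle 0."""
    points = []
    for i in range(num_points):
        angle = 2 * math.pi * i / num_points
        # Assumption: each tone gets an evenly spaced hue at full saturation and brightness.
        rgb = colorsys.hsv_to_rgb(i / num_points, 1.0, 1.0)
        points.append(TonePoint(lowest + i,
                                radius * math.cos(angle),
                                radius * math.sin(angle),
                                rgb))
    return points


def note_to_point(points: List[TonePoint], midi_note: int) -> TonePoint:
    """Look up the point for an incoming note; notes outside the range are rejected."""
    index = midi_note - points[0].midi_note
    if not 0 <= index < len(points):
        raise ValueError(f"note {midi_note} is outside the visualised range")
    return points[index]
```

Because the circle is indexed by semitone, finding the point for a played note is a constant-time offset from the lowest note rather than a search.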

When a note is played, the corresponding point reacts, growing and moving away from the musician according to the note's velocity. Depending on the tone's volume and intensity, the point either stays at its peak amplitude, leaves a mark and detaches from the circle, or simply returns to its origin. Note bends are expressed as a slight twist of the circle.
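The reaction described above can be sketched as a small per-point state update. This is a hypothetical illustration, not the project's implementation: it assumes velocity arrives as a MIDI value (0 to 127), that intensity is already normalised to 0..1, and that pitch bend is normalised to -1..1. All constants (`MAX_OFFSET`, `MAX_SCALE`, `DETACH_THRESHOLD`, `MAX_TWIST`) and function names are invented for the example.

```python
import math
from dataclasses import dataclass
from typing import Optional

# Hypothetical tuning constants; the project does not publish its values.
MAX_VELOCITY = 127            # assumption: MIDI velocity range 0..127
MAX_OFFSET = 0.5              # furthest a point moves outward from the circle
MAX_SCALE = 3.0               # largest size a point grows to
DETACH_THRESHOLD = 0.8        # normalised intensity at which a point detaches
MAX_TWIST = math.radians(10)  # circle rotation for a full pitch bend


@dataclass
class PointState:
    offset: float = 0.0            # outward displacement from the circle
    scale: float = 1.0             # size relative to the resting point
    detached: bool = False
    mark: Optional[float] = None   # offset at which a detached point left its mark


def react(state: PointState, velocity: int, intensity: float) -> PointState:
    """On note-on: grow and push the point outward by velocity, then decide whether it detaches."""
    amount = min(max(velocity / MAX_VELOCITY, 0.0), 1.0)
    state.offset = amount * MAX_OFFSET
    state.scale = 1.0 + amount * (MAX_SCALE - 1.0)
    state.detached = intensity >= DETACH_THRESHOLD
    state.mark = state.offset if state.detached else None
    return state


def release(state: PointState, decay: float = 0.9) -> PointState:
    """Per-frame update: detached points hold their peak, others ease back toward the circle."""
    if not state.detached:
        state.offset *= decay
        state.scale = 1.0 + (state.scale - 1.0) * decay
    return state


def circle_twist(bend: float) -> float:
    """Map a normalised pitch bend to a rotation (in radians) of the whole circle."""
    return max(-1.0, min(1.0, bend)) * MAX_TWIST
```

For example, a soft note (`react(PointState(), 127, 0.5)`) jumps to full size and then shrinks a little on every `release` call, while a loud one (`intensity=0.9`) stays at its peak and records a mark.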